Continuing on my journey up the Azure Automation mountain, I recently completed a simple Az PowerShell script that takes several input parameters and scales UP or scales DOWN a given Azure SQL database depending on the time of day. Before I go any further, if you are just getting started in Azure Automation, […]
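The original script is written in Az PowerShell and isn't shown in this excerpt. To illustrate only the time-of-day decision, here is a rough shell sketch that uses the Azure CLI instead. The 08:00 to 18:00 window, the S3/S0 tiers, and the resource names (`my-rg`, `my-server`, `my-db`) are hypothetical placeholders. The script prints the `az sql db update` command rather than running it.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of time-of-day scaling for an Azure SQL database.
# The 08:00-18:00 window, the S3/S0 tiers, and the resource names are
# placeholder assumptions, not values from the original PowerShell script.

pick_service_objective() {
  # 10# forces base 10 so hours like "08" and "09" are not parsed as octal
  local hour=$((10#$1))
  if (( hour >= 8 && hour < 18 )); then
    echo "S3"   # scale UP during the assumed business hours
  else
    echo "S0"   # scale DOWN outside them
  fi
}

# Uses this machine's local clock; prints the Azure CLI command instead of running it.
target=$(pick_service_objective "$(date +%H)")
echo az sql db update --resource-group my-rg --server my-server \
  --name my-db --service-objective "$target"
```

Note that `date +%H` reads the clock of whatever machine runs the script. Check which time zone your automation host uses before choosing the window.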

I haven’t posted in ages because I’ve been generally slammed with work, but this little piece I threw together was too good to forget about, so I wanted to write it down. If you work with a larger ownCloud deployment that has a lot of users and allows file sharing, you may be curious to […]

Without going into great technical detail (which on this topic I couldn’t do anyway), after much reading it seems that a recommended practice is to spread your SQL Server TempDB across multiple files based on how many cores (or perhaps threads) your processor has. To keep things simple, let’s say I have a 4 […]
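Microsoft's commonly cited guidance counts *logical* processors: use one tempdb data file per logical processor, up to eight, and add more only if contention persists. The sketch below applies that rule and prints `ALTER DATABASE ... ADD FILE` statements for the extra files. The `T:\TempDB` folder, the `tempdevN` logical names, and the 1024MB size are hypothetical placeholders. It only generates T-SQL for you to review and does not touch the server.

```shell
#!/usr/bin/env bash
# Sketch of the widely cited rule of thumb: one tempdb data file per logical
# processor, up to a maximum of 8.

tempdb_file_count() {
  local cpus=$1
  if (( cpus > 8 )); then
    echo 8
  else
    echo "$cpus"
  fi
}

# Print T-SQL that adds the extra data files (file 1, tempdev, already exists).
# The T:\TempDB folder and the 1024MB size are hypothetical placeholders.
emit_tempdb_sql() {
  local count i path
  count=$(tempdb_file_count "$1")
  for (( i = 2; i <= count; i++ )); do
    path="T:\\TempDB\\tempdev${i}.ndf"
    printf "ALTER DATABASE tempdb ADD FILE (NAME = N'tempdev%d', FILENAME = N'%s', SIZE = 1024MB);\n" "$i" "$path"
  done
}

# Defaults to this machine's logical CPU count; pass a number to override.
emit_tempdb_sql "${1:-$(nproc)}"
```

Keeping all tempdb data files the same size matters, because SQL Server's proportional fill spreads allocations according to free space in each file.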

I have been fiddling about with setting up a SQL Server 2012 failover cluster using an EqualLogic SAN. After a whole lot of digging about, I found two posts on two different sites that got me about 90% of the way there. However, some key “gotchas” and other information were missing […]

SQL Server instances running a lot of databases can get a bit confusing as to what is kept where, especially if the instance was set up by someone else. To that end, there is a very handy query you can run in SSMS to quickly return the on-disk file locations of all SQL data […]
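The post's own query is cut off in this excerpt. One common way to get this information is the `sys.master_files` catalog view. The sketch below runs such a query from a shell with `sqlcmd` instead of SSMS. The server name is a placeholder, and `-E` assumes integrated (Windows) authentication. You can also paste the same SELECT into SSMS unchanged.

```shell
#!/usr/bin/env bash
# List the physical location of every data and log file on a SQL Server
# instance using the sys.master_files catalog view.
QUERY="SELECT DB_NAME(database_id) AS database_name, type_desc, name AS logical_name, physical_name FROM sys.master_files ORDER BY database_name, file_id;"

list_sql_files() {
  # -S server (defaults to localhost), -E integrated authentication,
  # -Q run the query and exit
  sqlcmd -S "${1:-localhost}" -E -Q "$QUERY"
}
```

Usage: `list_sql_files 'MYSERVER\SQLEXPRESS'`. The `type_desc` column distinguishes ROWS (data) files from LOG files.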

This is the final article (I think…) on MySQL database backup. In my previous article, I discussed how to craft a very efficient script for automated LOCAL backup of your MySQL databases. But that isn’t the whole story. What happens if your server burns to the ground… or, more likely, the hard drive gives up […]
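The excerpt stops before the post's solution. A common way to get backups off the box is `rsync` over SSH, and here is a minimal sketch of that approach (not necessarily the author's). The local directory, remote user, host, and destination path are hypothetical placeholders. It also assumes key-based SSH login to the remote machine is already set up.

```shell
#!/usr/bin/env bash
# Push local MySQL backup files to another machine with rsync over SSH.
# Source dir, remote user/host, and destination path are placeholders.
BACKUP_DIR="${BACKUP_DIR:-/var/backups/mysql}"
REMOTE="${REMOTE:-backup@offsite.example.com:/srv/mysql-backups/}"

push_offsite() {
  # -a preserves timestamps/permissions, -z compresses in transit;
  # the trailing slash on the source copies the directory's contents.
  local cmd=(rsync -az -e ssh "$BACKUP_DIR/" "$REMOTE")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "${cmd[*]}"   # show what would run
  else
    "${cmd[@]}"
  fi
}
```

Run `DRY_RUN=1 push_offsite` first to check the command. After that, call `push_offsite` from cron once the local backup job has finished.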

Earlier this year I wrote an article about creating scripts for backing up your MySQL databases. A week ago I wrote another article about automated, secure login to your MySQL root account. I have also been learning Python on and off and picking up quite a bit about variables and loops. While […]
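The script itself is cut off here. To illustrate the loop idea, the following shell sketch (not the author's script) lists every database, filters out MySQL's built-in schemas, and dumps each remaining database to its own dated, gzipped file. It assumes credentials come from `~/.my.cnf`. The output directory is a placeholder. Whether to skip the `mysql` system schema is also a choice you may want to change.

```shell
#!/usr/bin/env bash
# Dump each user database to its own gzipped file.
# Assumes credentials come from ~/.my.cnf; the output dir is a placeholder.
OUT_DIR="${OUT_DIR:-/var/backups/mysql}"

# Drop MySQL's built-in schemas from a newline-separated list of names.
filter_user_dbs() {
  grep -Ev '^(information_schema|performance_schema|mysql|sys)$'
}

backup_all() {
  local stamp db
  stamp=$(date +%F)
  mkdir -p "$OUT_DIR"
  # -N drops the column header, -e runs the statement and exits
  mysql -N -e 'SHOW DATABASES' | filter_user_dbs | while read -r db; do
    mysqldump --single-transaction "$db" | gzip > "$OUT_DIR/${db}_${stamp}.sql.gz"
  done
}
```

`--single-transaction` gives a consistent snapshot for InnoDB tables without locking them. MyISAM tables don't get that guarantee.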

If you are looking for a quick way to install a LAMP (Linux, Apache, MySQL, PHP) stack on an Ubuntu server, this should take care of you: `sudo apt-get install tasksel`, then `sudo tasksel install lamp-server`, then `mysql_secure_installation`. The first command installs tasksel, which is a really handy program.

Do you work with MySQL? I do… quite a bit. Do you often script stuff on your server to make your life easier? I do that as well… quite a bit… Are you including your database user name and password (or worse… your MySQL instance’s root account and password!) in plain text in your script… […]
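One standard way to keep passwords out of scripts is a MySQL option file. Both `mysql` and `mysqldump` automatically read the `[client]` group from `~/.my.cnf`. The sketch below writes such a file so that only your user can read it (mode 600). The user name and password are placeholders. On MySQL 5.6 and later, `mysql_config_editor` offers a similar option that stores an obfuscated login path in `~/.mylogin.cnf`.

```shell
#!/usr/bin/env bash
# Store MySQL credentials in an option file readable only by you, so
# scripts can call mysql/mysqldump without a password on the command line.
# The user name and password below are placeholders.
write_my_cnf() {
  local file="${1:-$HOME/.my.cnf}"
  # umask 077 in a subshell: a newly created file gets mode 600
  (
    umask 077
    cat > "$file" <<'EOF'
[client]
user=backup_user
password=change-me
EOF
  )
  chmod 600 "$file"   # also tighten permissions if the file already existed
}
```

Once this file is in place, a script can run `mysqldump mydb` without passing `-u` or `-p`.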

As I am now hosting about 8 different sites, I wanted to make restoring from backups a bit easier and less manual. The ultimate goal is to log in to my second server and have it up and running with as few keystrokes as possible. Scripting was the answer. It took me several hours of […]
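The restore script itself isn't included in this excerpt. Here is a minimal shell sketch of the idea, assuming a hypothetical naming scheme where dumps are saved as `<database>_<YYYY-MM-DD>.sql.gz`. It restores each dump into a database named after the file. The dump directory is a placeholder, and credentials are assumed to come from `~/.my.cnf`.

```shell
#!/usr/bin/env bash
# Restore every gzipped dump in a directory into a database named after
# the file, e.g. shop_2024-01-31.sql.gz -> shop. Naming scheme is an assumption.
DUMP_DIR="${DUMP_DIR:-/var/backups/mysql}"

db_name_from_file() {
  local base
  base=$(basename "$1" .sql.gz)
  echo "${base%_*}"   # strip the trailing _YYYY-MM-DD stamp
}

restore_all() {
  local f db
  for f in "$DUMP_DIR"/*.sql.gz; do
    [[ -e "$f" ]] || continue   # glob matched nothing
    db=$(db_name_from_file "$f")
    mysql -e "CREATE DATABASE IF NOT EXISTS \`$db\`"
    gunzip -c "$f" | mysql "$db"
  done
}
```

If a directory holds several dated dumps of the same database, they are applied in name order. With ISO dates, this means the newest dump is applied last.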

If you haven’t gotten around to setting up regular backups of your website’s MySQL databases, you are asking for serious trouble. In this article I am going to provide shell script examples that you can use to quickly set up database backup jobs. As far as scope goes, we are going to be talking about how […]
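Below is a small sketch of a typical backup job: a dated dump of one database, plus pruning of old copies so the disk doesn't fill up. The database name, directory, and 7-day retention are hypothetical placeholders. This is an illustration, not the article's own script.

```shell
#!/usr/bin/env bash
# Dated dump of one database plus pruning of old copies.
# Database name, directory, and 7-day retention are placeholder assumptions.
DB="${DB:-mydb}"
BACKUP_DIR="${BACKUP_DIR:-/var/backups/mysql}"
KEEP_DAYS="${KEEP_DAYS:-7}"

dump_db() {
  mkdir -p "$BACKUP_DIR"
  mysqldump --single-transaction "$DB" | gzip > "$BACKUP_DIR/${DB}_$(date +%F).sql.gz"
}

prune_old() {
  # delete dumps last modified more than KEEP_DAYS days ago
  find "$BACKUP_DIR" -name '*.sql.gz' -type f -mtime +"$KEEP_DAYS" -delete
}

# Example crontab entry (02:30 daily), assuming a script that ends with
# `dump_db && prune_old` is saved as /usr/local/bin/mysql_backup.sh:
# 30 2 * * * /usr/local/bin/mysql_backup.sh
```

By default, a pipeline's exit status is the status of `gzip`, not `mysqldump`. Add `set -o pipefail` if you want a failed dump to be reported as a failure.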

This tutorial assumes you have some experience with Linux administration, although I try to hand-hold as much as possible. If you are on a shared hosting plan and don’t have root access, you can easily modify the shell script we will create later to target your user’s home directory on the server. You also need […]

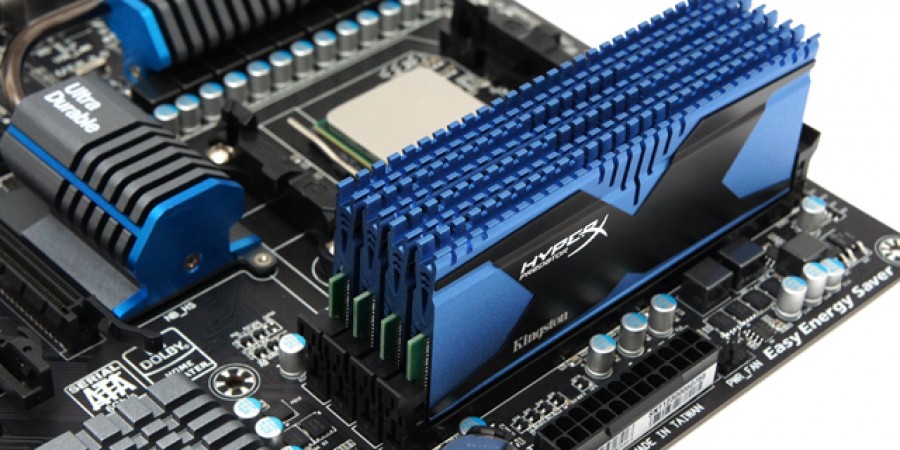

What is a RAM disk, you ask? Simply put, you carve out a piece of your system’s RAM and use it as a normal file system. But you probably have some more questions… Why would I want to do this? Because RAM is very fast: faster than most (any?) SSDs. So if you […]
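On Linux, the usual way to create a RAM disk is a `tmpfs` mount. Here is a minimal sketch of that approach (the post's own method is cut off). The mount point and 512M size are hypothetical placeholders, and the real run needs root. Set `DRY_RUN=1` to print the commands without executing them.

```shell
#!/usr/bin/env bash
# Mount a tmpfs RAM disk. Anything stored there is lost on unmount or reboot.
# Mount point and size are placeholder assumptions; the real run needs root.
RAMDISK_DIR="${RAMDISK_DIR:-/mnt/ramdisk}"
RAMDISK_SIZE="${RAMDISK_SIZE:-512M}"

mount_ramdisk() {
  local run=()
  # With DRY_RUN=1, print the commands instead of executing them
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    run=(echo)
  fi
  "${run[@]}" mkdir -p "$RAMDISK_DIR"
  "${run[@]}" mount -t tmpfs -o size="$RAMDISK_SIZE" tmpfs "$RAMDISK_DIR"
}

# To recreate it at boot, an /etc/fstab line such as:
# tmpfs  /mnt/ramdisk  tmpfs  defaults,size=512M  0  0
```

After mounting, `df -h /mnt/ramdisk` should show the new file system. A tmpfs only uses RAM for what is actually stored in it, up to the size limit.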

Working on some performance tuning for MySQL today. Here are by far the best resources I have found:

- Best general tuning guide: http://www.mysqlperformanceblog.com/2006/09/29/what-to-tune-in-mysql-server-after-installation/
- Expanded point from that guide: http://www.mysqlperformanceblog.com/2007/11/03/choosing-innodb_buffer_pool_size/
- The fix for MySQL refusing to start after I adjusted a log file size: http://dba.stackexchange.com/questions/1261/how-to-safely-change-mysql-innodb-variable-innodb-log-file-size

In my case MySQL was running […]
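A commonly quoted starting point for `innodb_buffer_pool_size` on a server dedicated to MySQL is roughly 70 to 80 percent of physical RAM. The linked guides explain why this is only a rule of thumb. The sketch below computes such a figure from `/proc/meminfo` (Linux only). The 75% default is an assumed value, so adjust it for whatever else runs on the machine.

```shell
#!/usr/bin/env bash
# Rough starting value for innodb_buffer_pool_size on a dedicated MySQL
# server: a percentage of physical RAM (75% by default here, an assumption).

suggest_buffer_pool_mb() {
  local mem_kb=$1 pct=${2:-75}
  # kB * pct / 100 gives kB for the pool; / 1024 converts to MB
  echo $(( mem_kb * pct / 100 / 1024 ))
}

# On Linux, MemTotal in /proc/meminfo is reported in kB.
if [[ -r /proc/meminfo ]]; then
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  echo "innodb_buffer_pool_size = $(suggest_buffer_pool_mb "$mem_kb")M"
fi
```

For example, on a 16 GiB machine (16777216 kB), the 75% default suggests 12288M.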